One of the frustrations of the Labour NEC elections is that you never really know who you’re voting for. Well, obviously you know their names, and they’re happy to give you an impressive list of the Committees they have been elected to (and they are always Committees, not committees).

But what is their stance? Who do they support? Are they Traitorous Blairite Scum™ or Vile Entryist Trots®? How do we know? No one actually says: “I’m backing Eagle. She’s great” or “Corbyn for the Win!”. So we’re left guessing, or poring through their candidate statements, or worse still, relying on the various lobby groups to give us a clue to the slates etc.

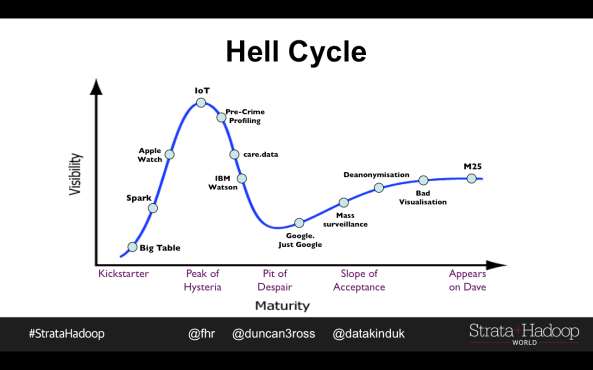

So, instead of that, because, frankly, it’s boring, I decided to do a little bit of word frequency analysis, to see if there were words that were unique to one group, or at least more likely to come up in one group than the other.

To help me categorise the candidates, I followed a helpful guide from Totnes Labour Party. That gave me the two major slates: Progress/Labour First*** vs the something something Grassroots Alliance***.

So, all I need to do is go to the Labour Party site, download the candidate statements, and away I go. Except, of course, the Party website is bloody useless at giving actual information.

So once more it’s back to the Totnes Labour website, supported by Google. I can’t find statements from every candidate, but I get most of them. Enough for this, anyway.

Normally I would fire up R, load the data, and away I go. But actually there is so little of it that it’s time for some simple Excel kludging.

Methodology*****

First I put all the text from each slate into a single document, lowercased it to remove case sensitivity, and worked out the frequency of each word (with common stop words stripped out, natch).
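If you’d rather not kludge it in Excel, the counting step might look something like this in Python. It is a minimal sketch, not what I actually ran: the tokenising regex and the tiny `STOP_WORDS` set are illustrative assumptions (a real run would want a proper stop word list), and expressing each frequency as a share of the words left after stop-word removal is an assumed choice of denominator.

```python
import re
from collections import Counter

# Illustrative stop word list (an assumption; use a fuller list in practice)
STOP_WORDS = {"the", "and", "a", "of", "to", "in", "i", "is", "for", "on",
              "that", "we", "our", "my", "have", "be", "as", "with", "it"}

def word_frequencies(text: str) -> dict[str, float]:
    """Return each word's share of the non-stop-word tokens in `text`."""
    # Lowercase first so "Labour" and "labour" count as the same word
    tokens = re.findall(r"[a-z']+", text.lower())
    words = [t for t in tokens if t not in STOP_WORDS]
    counts = Counter(words)
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}

# Hypothetical snippet standing in for one slate's combined statements
slate_text = "I will work for members. Members need a Labour Party that can win."
freqs = word_frequencies(slate_text)
print(sorted(freqs.items(), key=lambda kv: kv[1], reverse=True)[:3])
```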

I know that lumping each slate into one document means I can’t play with tf-idf (sorry, Chris), but really there weren’t enough documents to call this a corpus anyway.
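To see why two documents are not much use, here is a minimal sketch using the textbook definition idf(t) = log(N / df(t)), where N is the number of documents and df(t) is how many of them contain term t. (That definition is an assumption: libraries such as scikit-learn default to smoothed variants that behave differently.) With one document per slate, N = 2, so any word used by both slates gets idf = log(2/2) = 0 and its tf-idf weight vanishes however often it is used; only words unique to one slate score anything.

```python
import math

def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Plain tf-idf: tf = count / document length, idf = log(N / df)."""
    n_docs = len(docs)
    # Document frequency: how many documents contain each term
    df: dict[str, int] = {}
    for doc in docs:
        for term in set(doc):
            df[term] = df.get(term, 0) + 1
    weights = []
    for doc in docs:
        scores = {}
        for term in set(doc):
            tf = doc.count(term) / len(doc)
            scores[term] = tf * math.log(n_docs / df[term])
        weights.append(scores)
    return weights

# Two hypothetical "documents", one per slate
momentum = ["labour", "labour", "wales", "commitment"]
progress = ["labour", "country", "think", "think"]
w_m, w_p = tf_idf([momentum, progress])
print(w_m["labour"], w_m["wales"])  # the shared word scores 0.0; the slate-only word does not
```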

Next I created a common list of words, with the frequency in each slate. This isn’t as easy as it sounds, because a straightforward stemming approach isn’t really going to cut it. These documents are in SocialistSpeak (Centre-left social democratic/democratic socialist sub-variant), and so need handcrafting. There is, for example, a world of difference between inclusive and inclusion.
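The handcrafting boils down to a lookup table that folds together variants judged to mean the same thing, while deliberately leaving pairs like inclusive/inclusion apart (a suffix-stripping stemmer may well merge those two). A minimal sketch; `CANONICAL` is a hypothetical, hand-built mapping for illustration, not my actual list.

```python
from collections import Counter

# Hypothetical hand-built mapping from variant -> canonical form.
# Words not listed pass through unchanged, so "inclusive" and
# "inclusion" stay distinct.
CANONICAL = {
    "members": "member",
    "membership": "member",
    "elections": "election",
    "policies": "policy",
}

def normalise(words: list[str]) -> list[str]:
    """Map each lowercased word to its hand-chosen canonical form."""
    return [CANONICAL.get(w, w) for w in words]

words = ["members", "membership", "inclusive", "inclusion", "policies"]
print(Counter(normalise(words)))
```

The design choice is deliberate: an explicit table is tedious, but every merge is a decision you can see and argue with.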

So once that tedious task was out of the way, I took the frequency with which each word appeared in each slate and simply subtracted one from the other.
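In code, the comparison is a join on the word followed by a subtraction, with a word missing from one slate counted as zero there. A minimal sketch, assuming each slate’s frequencies are held as a dict of word → share; the numbers are illustrative placeholders, not the real figures. It also computes a simple mean of the two slates, one plausible way to produce an “Avg Freq” column.

```python
def compare_slates(freq_a: dict[str, float], freq_b: dict[str, float]):
    """Return (word, freq_a, freq_b, difference, average) rows, sorted by difference."""
    rows = []
    # Union of both vocabularies; a word absent from a slate has frequency 0
    for word in freq_a.keys() | freq_b.keys():
        a = freq_a.get(word, 0.0)
        b = freq_b.get(word, 0.0)
        rows.append((word, a, b, a - b, (a + b) / 2))
    # Largest positive difference first: the words most characteristic of slate A
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows

# Illustrative numbers only
momentum = {"wales": 0.02, "labour": 0.03, "need": 0.01}
progress = {"labour": 0.02, "need": 0.03, "country": 0.01}

for word, m, p, diff, avg in compare_slates(momentum, progress):
    print(f"{word:10} {m:6.1%} {p:6.1%} {diff:+7.1%} {avg:6.1%}")
```

Reading the list from the top gives one slate’s characteristic words; reading it from the bottom gives the other’s.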

Results

Firstly, which are the most frequently used words across both slates?

| Word | Avg Freq |
| --- | --- |
| MEMBER | 2.7% |
| LABOUR | 2.5% |
| PARTY | 2.3% |
| NEC | 1.5% |
| WORK | 1.1% |
| NEED | 1.1% |
| ELECTION | 1.1% |
| POLICY | 0.9% |
| SUPPORT | 0.9% |
| YEARS | 0.9% |
| WIN | 0.8% |
| CLP | 0.8% |
| CAMPAIGN | 0.8% |
| PEOPLE | 0.7% |
| WILL | 0.7% |
| GOVERNMENT | 0.7% |
| POLITICS | 0.7% |
| WALES | 0.6% |
| NATIONAL | 0.6% |
| JEREMY | 0.6% |

These words appear frequently in both camps. You can easily imagine statements containing them:

“Since I was elected to the NEC in 2010 I have spent six years supporting members of the Labour Party. Sadly Jeremy can’t win in Wales/Only Jeremy can win an election against this Government”

I don’t really know why Wales pops up.

But let’s look at each slate independently.

Team M

Firstly, Team Momentum****** (or the Centre-Left Grassroots Alliance (it isn’t clear where the apostrophe should go (or indeed, if there should be one))).

These are the words most likely to be in a Momentum candidate’s statement and not in a Progress candidate’s statement, in order of momentum (sorry, couldn’t resist):

| Word | Freq (Momentum) |
| --- | --- |
| WALES | 1.2% |
| JEREMY | 0.9% |
| YEARS | 1.2% |
| PUBLIC | 0.9% |
| COMMITMENT | 0.6% |
| LONDON | 0.8% |
| SERVICES | 0.7% |
| NATIONAL | 0.9% |
| CLP | 1.1% |
| LABOUR | 2.7% |
| ELECTION | 1.3% |
| EQUALITY | 0.5% |
| JOBS | 0.5% |
| COMMITTEE | 0.6% |
| SECRETARY | 0.4% |
| WORK | 1.3% |
| CLEAR | 0.4% |
| BELIEVE | 0.6% |
| UNION | 0.7% |
| GIVE | 0.4% |

I think we’ve found where Wales comes from: a proud Welsh candidate on the Momentum slate.

They are proud of Jeremy, are probably active in their union, and have been a party secretary.

Team P/L1

How about Team Progress? (Or the less impressively websited Labour First.)

| Word | Freq (Progress) |
| --- | --- |
| NEED | 1.7% |
| MEMBER | 3.3% |
| PEOPLE | 1.1% |
| WILL | 1.1% |
| NEC | 1.8% |
| WIN | 1.2% |
| PARTY | 2.6% |
| COUNTRY | 0.6% |
| THINK | 0.6% |
| FIGHT | 0.7% |
| PUT | 0.7% |
| LOCAL | 0.8% |
| LISTEN | 0.5% |
| VOTE | 0.4% |
| NEW | 0.7% |
| DECISIONS | 0.3% |
| ENGAGE | 0.3% |
| MEAN | 0.3% |
| WELCOME | 0.3% |
| POLITICS | 0.8% |

Woah! That looks very different. Without wanting to judge either group’s politics, one looks (at first) like a very technocratic list of achievements, and the other like a very wide, forward-looking approach. This may simply reflect the fact that the Leader of the Party is on the Momentum side, so they have more things to point at and say “this is our record”, whereas the opposition have to look to the future.

Team WTF?

Are there any oddities?

Well, Blair and Brown (and Iraq) are mentioned by Progress, and not by Momentum, which is perhaps strange. Afghanistan is mentioned by Momentum.

Britain, England, and Scotland are mentioned far more by Momentum (as is Wales, but let’s ignore that for now).

Everyone is claiming to be a socialist. But not very much. (There was one use of socialist on each side. Of course maybe everyone assumes that everyone else knows they are a socialist).

Privatisation is mentioned by Momentum, renationalisation by Progress.

The word used most by Momentum but not at all by Progress is commitment.

The words used most by Progress and not at all by Momentum are country and think (it’s a tie).
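Finding these is just a filter: keep the words the other slate never uses, then take the most frequent, keeping ties together. A minimal sketch, assuming each slate’s frequencies are held as a dict of word → share; the numbers are illustrative only.

```python
def top_exclusive(freq_a: dict[str, float], freq_b: dict[str, float]) -> list[str]:
    """Words used on slate A but never on slate B, tied at the highest frequency."""
    # Keep only the words slate B never uses
    exclusive = {w: f for w, f in freq_a.items() if freq_b.get(w, 0.0) == 0.0}
    if not exclusive:
        return []
    best = max(exclusive.values())
    # Return every word sharing the top frequency, so ties are not hidden
    return sorted(w for w, f in exclusive.items() if f == best)

# Illustrative numbers only
progress = {"country": 0.006, "think": 0.006, "need": 0.017}
momentum = {"need": 0.005, "commitment": 0.006}
print(top_exclusive(progress, momentum))
```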

But surely…?

What should I really have done to make this a proper analysis?

- Firstly I should have got all the data.

- Then I should probably have thought about some more systematic stemming, and looking at n-grams (there is a huge difference between Labour and New Labour).
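Counting n-grams is only a small step on from counting single words. A minimal sketch of bigram (two-word) counts, which keeps “new labour” distinct from a bare “labour”; the sample sentence is invented for illustration.

```python
import re
from collections import Counter

def bigram_counts(text: str) -> Counter:
    """Count adjacent word pairs in lowercased text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # zip pairs each token with the one after it; in this simple version
    # pairs can span sentence boundaries
    return Counter(zip(tokens, tokens[1:]))

sample = "New Labour won. Labour must win again."
counts = bigram_counts(sample)
print(counts[("new", "labour")], counts[("labour", "must")])
```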

There is a whole range of interesting things you can do with text analysis, or there are word clouds.

Footnotes

*Very brief

**Only just, but more later!

***And this is why there is a problem. No one wants to call themselves No Progress at All, or Labour Last, and everyone thinks that they represent the grassroots. I’ve yet to see a group that markets itself as the home of the elitist intellectuals****

****Well maybe the Fabians. But I didn’t say it.

*****This seems to over-dignify it. But heck.

******They deny this.